Icebergs ahead: ‘Give it a go’ isn’t good enough when deploying Copilot for Microsoft 365

One of the big players on the AI scene is Microsoft’s offering, Copilot for Microsoft 365. This powerhouse integrates with familiar, well-loved applications including Outlook, Word, Excel, PowerPoint, OneDrive and more, and it promises, and delivers, an almost limitless range of possibilities.

Organisations and users alike are eager to get started with Copilot for Microsoft 365. Here comes the ‘but’...

According to Phil Crayford, Technical Learning Consultant at QA, too many organisations may be considering a ‘give it a go’ approach to rolling out Copilot for Microsoft 365.

If that sounds like you, ‘be aware there are dangers of Copilot for Microsoft 365 icebergs ahead!’ Phil warns. Read on to discover how the right skills, paired with Copilot’s integrated features, can help you steer around the pitfalls of a poorly planned deployment and become unsinkable...

Sensitive data and governance

The tip of the iceberg is the ‘deployment’ of Copilot for Microsoft 365 itself, which simply involves assigning Copilot for Microsoft 365 licences.

A major risk lies hidden beneath the surface: inadvertently exposing sensitive information to users.

Here’s a worrying example. When a user enters a prompt such as ‘What’s the salary of a CEO?’, Copilot searches all the information available to that user. If documents containing salary and bonus details for their own organisation’s CEO are not correctly protected, Copilot could include those details in its response, revealing sensitive information.

It’s important to note: Copilot for Microsoft 365 will never expose data the user does not already have permission to access.

However, it may surface information the user could access all along without realising it.
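To make the permission point concrete, here is a minimal Python sketch of permission-trimmed search. It is not how Copilot is implemented: the `Document` class, the `search_for_user` function and the sample users are hypothetical, and matching is a naive keyword check. It illustrates only the principle that results are filtered by what the user can already open, so a file that has been overshared is still returned.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical model of a file; `readers` lists every user who can open it.
    title: str
    content: str
    readers: set[str] = field(default_factory=set)


def search_for_user(user: str, query: str, docs: list[Document]) -> list[str]:
    """Return titles of documents that match any query term AND that the user can already open."""
    terms = query.lower().split()
    return [
        doc.title
        for doc in docs
        if user in doc.readers  # permission trimming: only files this user can open
        and any(term in doc.content.lower() for term in terms)
    ]


docs = [
    # Overshared: 'alice' was given access by mistake, e.g. via an overly broad sharing link.
    Document("CEO remuneration", "CEO salary and bonus details", {"hr_lead", "alice"}),
    Document("Lunch rota", "who buys lunch on Friday", {"hr_lead", "alice", "bob"}),
]

print(search_for_user("alice", "CEO salary", docs))  # ['CEO remuneration']
print(search_for_user("bob", "CEO salary", docs))    # []
```

Bob’s identical prompt returns nothing, while Alice sees the salary file only because of the over-broad permission. In this model, fixing the permission, not the search, is what closes the gap.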

The problem lies not with Copilot’s functionality but with your existing data and privacy structures. If you aren’t confident that sensitive information is properly under lock and key, Copilot has the potential to put any gaps under a harsh spotlight.

All AI tools operate within the bounds of regulations such as GDPR, so a hasty deployment of Copilot for Microsoft 365 runs the risk of breaching laws or regulations.

That is why, according to Phil, ‘compliance checks, privacy considerations, and legal reviews are essential before widespread adoption’.

Thankfully, Microsoft already provide a robust and mature Microsoft Information Protection framework covering the following important steps:

- Know your data

- Protect your data

- Prevent data loss

- Govern your data

Microsoft 365 also has your back. Numerous tools and features seamlessly integrate with Copilot for Microsoft 365 to automatically and dynamically recognise, classify and protect sensitive information.

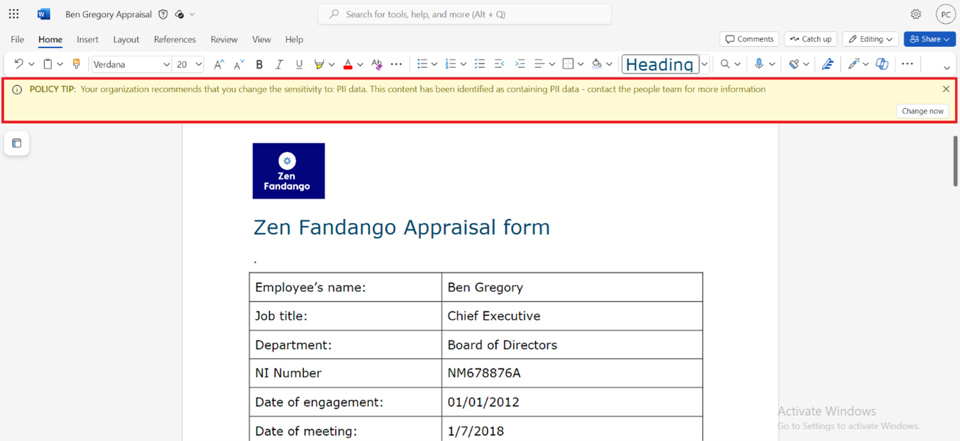

For instance, ‘policy tips’ flag when your content contains personally identifiable information (PII) or other sensitive data, and advise you to change the document’s sensitivity setting accordingly.
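At heart, a policy tip is pattern matching over content followed by a nudge to the user. The sketch below is a deliberately simplified, hypothetical stand-in: the two regular expressions, the `policy_tip` function and the plain-string label names are assumptions for illustration. Microsoft 365’s built-in sensitive information types are far more precise, combining patterns with supporting keywords, confidence levels and, for some types, checksum validation.

```python
import re
from typing import Optional

# Hypothetical, simplified detectors; these are NOT Microsoft's sensitive information type definitions.
PII_PATTERNS = {
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # Rough shape of a UK National Insurance number: two letters, six digits, one letter A-D.
    "UK National Insurance number": re.compile(r"\b[A-Z]{2} ?\d{2} ?\d{2} ?\d{2} ?[A-D]\b"),
}


def policy_tip(text: str, current_label: str) -> Optional[str]:
    """Return a tip if PII is detected in content still labelled 'General', else None."""
    found = [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]
    if found and current_label == "General":
        return (
            "This content appears to contain: " + ", ".join(found)
            + ". Consider applying a more restrictive sensitivity label."
        )
    return None


draft = "Contact jane.doe@example.com, NI number QQ 12 34 56 C"
print(policy_tip(draft, "General"))
print(policy_tip(draft, "Confidential"))  # None
```

Returning `None` for content that already carries a more restrictive label reflects the idea that the tip only appears when the current label looks too permissive for what the content contains.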

When Copilot for Microsoft 365 generates new content from existing data, it automatically applies the appropriate sensitivity protection and classification. In effect, this provides a safety net that limits how widely data becomes available and guards against human error.

Once your content has the correct sensitivity protection assigned, Copilot respects those classifications when responding to users, granting or denying access as appropriate.
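Microsoft’s documentation describes content that Copilot creates from labelled files as inheriting the sensitivity label of its sources, with the highest-priority label applied when several sources are involved. The Python sketch below models that rule together with a label-based access check. The `Label` values, the `ALLOWED_GROUPS` mapping and both functions are hypothetical; real sensitivity labels enforce access through protection settings configured by administrators, not through a lookup table like this.

```python
from enum import IntEnum


class Label(IntEnum):
    # Hypothetical label taxonomy; a higher value means a higher-priority (more restrictive) label.
    GENERAL = 0
    CONFIDENTIAL = 1
    HIGHLY_CONFIDENTIAL = 2


# Hypothetical mapping from each label to the groups permitted to open content carrying it.
ALLOWED_GROUPS = {
    Label.GENERAL: {"all_staff"},
    Label.CONFIDENTIAL: {"finance", "hr"},
    Label.HIGHLY_CONFIDENTIAL: {"hr"},
}


def inherited_label(source_labels: list[Label]) -> Label:
    """New content takes the highest-priority label among its sources (GENERAL if there are none)."""
    return max(source_labels, default=Label.GENERAL)


def can_view(user_groups: set[str], label: Label) -> bool:
    """Grant access only if the user belongs to at least one group the label permits."""
    return bool(user_groups & ALLOWED_GROUPS[label])


# A summary drafted from a general memo and a highly confidential pay review.
summary_label = inherited_label([Label.GENERAL, Label.HIGHLY_CONFIDENTIAL])
print(summary_label.name)                                  # HIGHLY_CONFIDENTIAL
print(can_view({"all_staff", "finance"}, summary_label))   # False
print(can_view({"all_staff", "hr"}, summary_label))        # True
```

Taking the maximum means that combining sources can only keep or raise the level of protection, never lower it.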

So it sounds like everything is covered, right? Not quite. Organisations need to take a few key steps to prepare for Copilot for Microsoft 365 so that it can work its magic.

To ensure that deploying Copilot for Microsoft 365 does not compromise the security and privacy of information, administrators need to understand all the tools and features available in Microsoft 365 that automate:

- Classification of sensitive information

- Discovery of sensitive information

- Protection of sensitive information

- Data loss prevention

- Governance of sensitive information (retention, review and disposal)

Most organisations want to detect when Copilot and other AI tools are being used across their workforce, especially when prompts involve sensitive information. Microsoft provide an AI hub that presents analytics and insights, allowing organisations to review their internal AI interactions.

Microsoft 365 also provides administrators with tools to retain and review these interactions, helping to ensure that AI tools are not being misused.

So, the risk of Copilot exposing sensitive information need not be a cause for concern, as long as your deployment is thorough and your stakeholders are appropriately skilled.

Phil’s take-away is this:

‘I would strongly advise organisations and administrators to invest in learning and deploying the available Microsoft 365 compliance tools to classify, discover and protect sensitive information, before deploying Copilot for Microsoft 365.’

If you’re ready to build the foundation of skills for a safe and successful rollout of Copilot for Microsoft 365, learn more about our QA-authored, Microsoft-endorsed course, Mastering Copilot for Microsoft 365 Readiness, Deployment and Management for IT Professionals.